Experiments with E2E tests, GitHub Actions, Playwright, and Vercel

I maintain a couple of small websites. Thanks to their JAMstack architecture they are mostly running themselves with zero issues. But a few tasks still need to be done manually and I’m always looking for ways to automate them.

One such task is upgrading dependencies. Dependabot (❤️) already helps me with security and version updates. It automatically creates pull requests with new updates which (combined with an automated build pipeline) means I can update a dependency with a few clicks.

But before I can merge anything I still need to verify nothing broke. As these are small content websites I don’t have any automated tests.

I could just ignore dependencies and keep the websites running on older versions. I rarely use any of the new features anyway (I can always manually update if I need to) — and security updates are mostly irrelevant for simple blogs with no user interaction.

But I’m also running these websites to experiment. To learn new things. To try out new technologies. So I decided to give UI screenshot tests a try.

UI screenshot tests

I’ve had the following task on my todo list for a while:

“Try using UI snapshot tests”

I find the idea of taking a screenshot of my website and comparing it to a reference image interesting. It’s a very simple test to write and it would catch most issues a simple content website can have.

If my blog displays an image similar to my reference screenshot then it is doing what it is supposed to do.

It won’t catch any interaction issues (e.g., broken links) but it’s a good start.

Playwright

Setting up Playwright was straightforward. I followed the installation guide and had my first test running in just a few minutes.

I even wrote a few tests for Tribe of Builders to see what it is like:

    import { test, expect } from '@playwright/test'

    test('should have title', async ({ page }) => {
      await page.goto('/')
      await expect(page.locator('h1')).toContainText('Become a better builder.')
    })

    test('should have logo title', async ({ page }) => {
      await page.goto('/')
      await expect(page.locator('h2').nth(0)).toContainText('Tribe of Builders')
    })

Next up I wrote my first screenshot test:

    import { test, expect } from '@playwright/test'

    const guests = [
      'alematit',
      'buildwithmaya',
      'adam-collins',
      'alan-morel-dev',
      'nico-jeannen',
    ]

    for (const name of guests) {
      test(`should match screenshot for guest post ${name}`, async ({ page }) => {
        await page.goto('posts/' + name)
        await expect(page).toHaveScreenshot({ fullPage: true })
      })
    }

It runs through a few tribe guests and their story pages, e.g., https://www.tribeof.builders/posts/adam-collins.

As you can see it only takes a few lines of code to have a pretty powerful test.

The screenshot tests also ran smoothly. The first time you run the tests, Playwright captures screenshots and saves them next to the test. When you run the tests again, it compares the new screenshots with the saved images.

Wonderful. I now had a few tests, ready to integrate with my CI (GitHub Actions).

Playwright comes with its own template for running on GitHub. And if I just wanted to run regular E2E tests the template would have been sufficient. But screenshot tests are a bit more tricky than that (it turns out).

Screenshots taken on my laptop are unlikely to match 100% with screenshots taken on a GitHub agent. Playwright knows this and keeps reference screenshots per platform, so if I take screenshots locally and then run my tests on a machine with a different operating system, it will not find matching reference images and will take new screenshots instead.
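The platform dependence is visible in the snapshot file names: by default Playwright appends the project (browser) name and the platform to each one. A minimal sketch of that naming scheme follows. The helper `snapshotFileName`, the snapshot name `guest-post-1`, and the macOS laptop are assumptions for illustration, not Playwright APIs or details from my setup:

```typescript
// Sketch of Playwright's default snapshot naming: <name>-<project>-<platform>.png.
// snapshotFileName is a hypothetical helper for illustration, not a Playwright API.
type Platform = 'darwin' | 'linux' | 'win32'

function snapshotFileName(name: string, project: string, platform: Platform): string {
  return `${name}-${project}-${platform}.png`
}

// Reference image captured on a (hypothetical) macOS laptop.
const localBaseline = snapshotFileName('guest-post-1', 'chromium', 'darwin')
// File the same test looks for on an ubuntu-latest runner.
const ciBaseline = snapshotFileName('guest-post-1', 'chromium', 'linux')

console.log(localBaseline) // guest-post-1-chromium-darwin.png
console.log(ciBaseline) // guest-post-1-chromium-linux.png
console.log(localBaseline === ciBaseline) // false
```

Because the Linux runner looks for a file under its own name, reference images committed from a different operating system are never compared there.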

Taking screenshots using GitHub Actions

At this point I turned to the internet and found this great guide from Matteo. Much of what I will write next is based on what I learned from that post. I’m mostly relaying my overall experience here so if you want more details I highly recommend reading it.

To take screenshots on GitHub I created the following workflow:

    name: Take screenshots
    on:
      workflow_dispatch:
    defaults:
      run:
        working-directory: website
    jobs:
      run:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
          - name: Install dependencies
            run: yarn install --frozen-lockfile
          - name: Install Playwright Browsers
            run: npx playwright install --with-deps
          - name: Run Playwright tests
            run: npx playwright test --update-snapshots
          - name: Commit changes
            uses: EndBug/add-and-commit@v9
            with:
              message: 'screenshots updated'
              committer_name: GitHub Actions
              committer_email: actions@github.com
              add: "['website/e2e/posts.spec.ts-snapshots/*.png']"

Most of the steps are similar to the initial Playwright template. But I run the tests with --update-snapshots and at the end I commit any *.png changes to the snapshot folder.

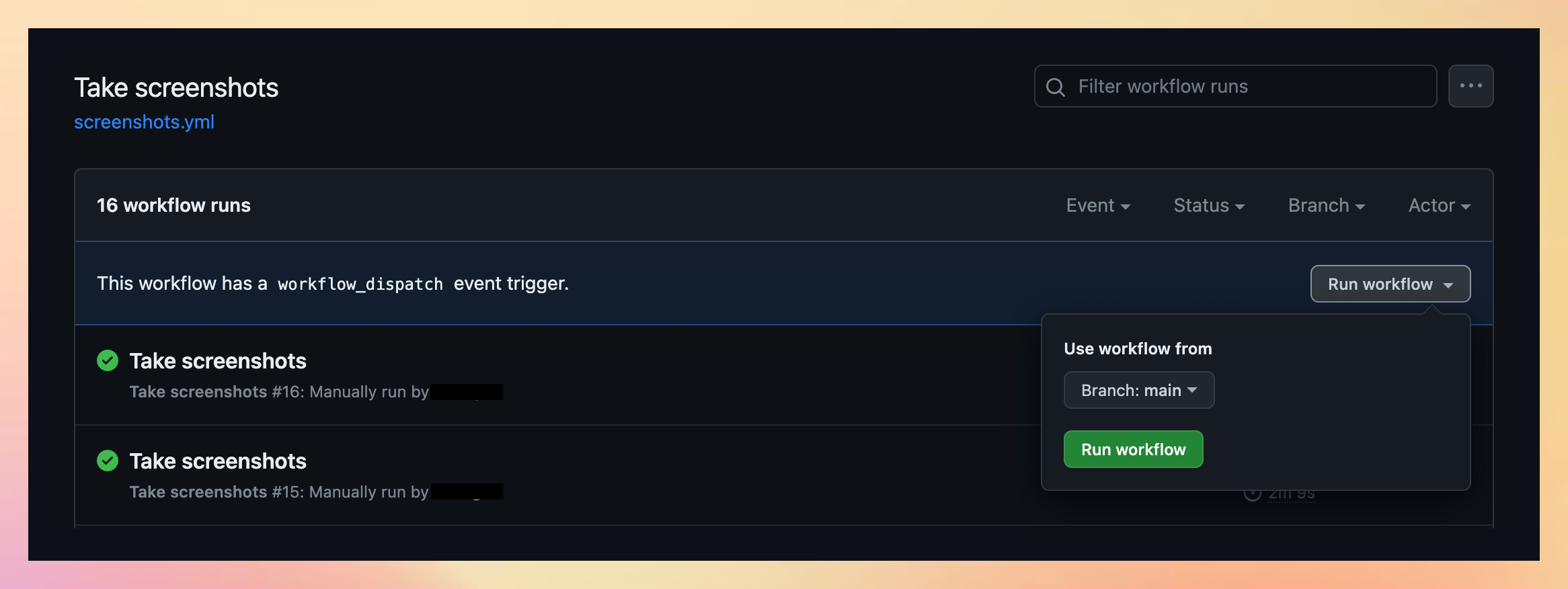

The action runs on workflow_dispatch which means I can trigger it manually by clicking a button:

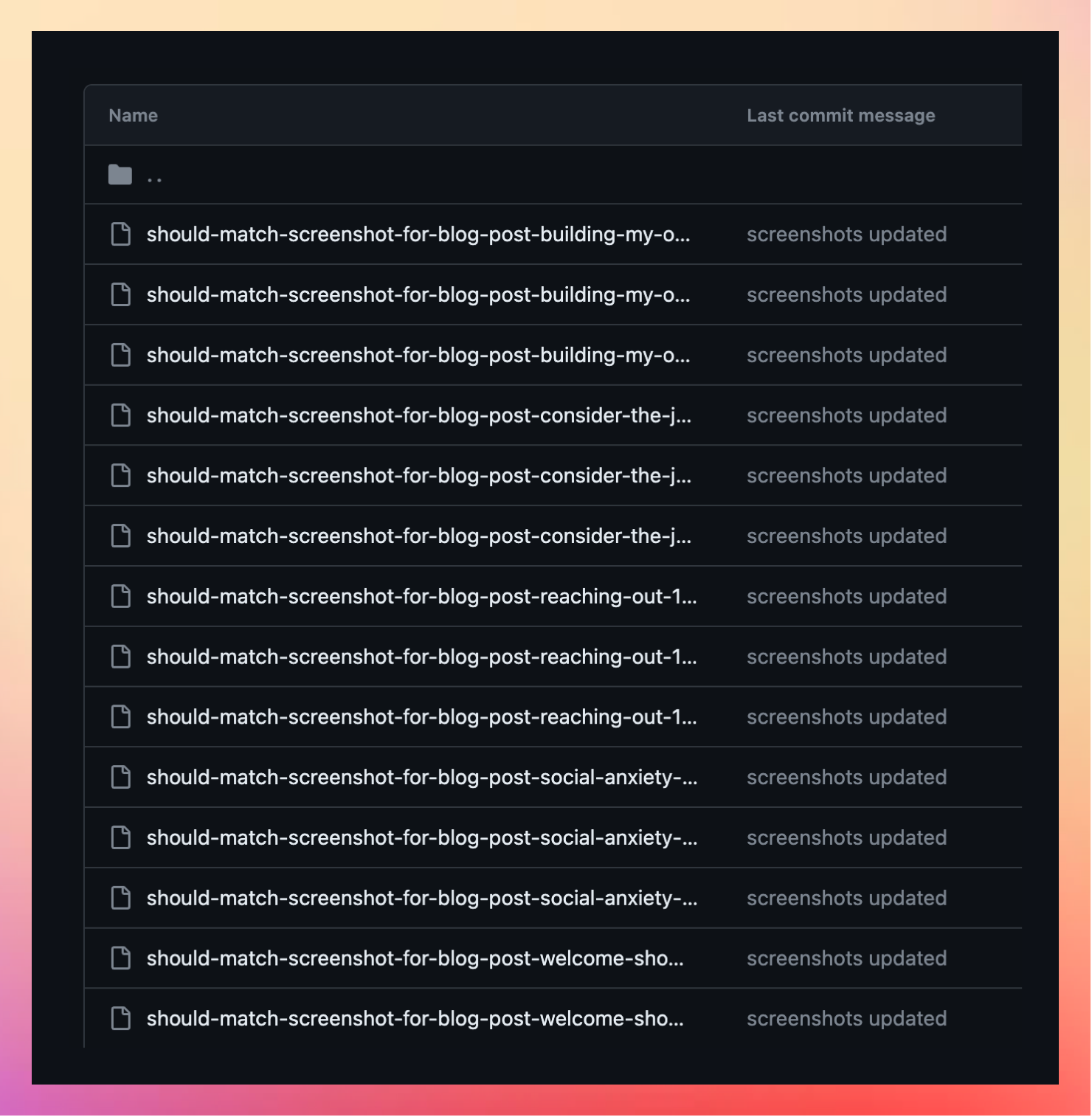

And the action will build my blog, take screenshots of it, and commit those screenshots.

This means I can now tell GitHub Actions to update my screenshots whenever I have made any breaking changes.

However, I can’t tell it to do that from within, e.g., a PR where I have made those changes. Any code I merge that changes the UI will fail the screenshot test until I update the screenshots.

There are better solutions for sure. But this is enough for my small experiment. Truth is I merge most of my code directly to main anyway. My E2E tests are purely for Dependabot updates, and these should not break the UI.

Now that I have screenshots taken by the GitHub Action agent, I can run E2E tests against these screenshots:

    name: Run e2e tests
    on:
      push:
        branches: [ main, master ]
      pull_request:
        branches: [ main, master ]
    defaults:
      run:
        working-directory: website
    jobs:
      test:
        timeout-minutes: 60
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
          - name: Install dependencies
            run: yarn install --frozen-lockfile
          - name: Install Playwright Browsers
            run: npx playwright install --with-deps
          - name: Run Playwright tests
            run: npx playwright test
          - uses: actions/upload-artifact@v3
            if: failure()
            with:
              name: playwright-report
              path: website/playwright-report/
              retention-days: 30

Much the same as before, except that it now runs the tests instead of taking new screenshots. The tests run on pull requests and on changes to main.

Screenshot flakiness

So far I have experimented with screenshot tests on this blog and on Tribe of Builders. One is built using Astro and the other Next.js.

In both cases my initial screenshot tests were flaky. The screenshot images would differ slightly, causing the tests to sometimes fail with no changes to the code.

I have not fully investigated the root cause yet 😔. But to reduce flakiness I relaxed the visual comparison using the maxDiffPixelRatio setting. It sets the maximum fraction of pixels (a value between 0 and 1) that may differ before the screenshot comparison fails.
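To make the setting concrete, here is a minimal sketch of what a pixel-ratio threshold means, assuming two same-sized RGBA buffers. The helper names are hypothetical, and the per-pixel check is a plain equality test; Playwright's real comparison also applies a per-pixel color tolerance, so treat this as an illustration of the ratio only:

```typescript
// Illustration only: fraction of pixels that differ between two RGBA buffers.
// diffPixelRatio and withinTolerance are hypothetical helpers, not Playwright APIs.
function diffPixelRatio(expected: Uint8Array, actual: Uint8Array): number {
  if (expected.length !== actual.length || expected.length % 4 !== 0) {
    throw new Error('buffers must be RGBA data of equal size')
  }
  const totalPixels = expected.length / 4
  if (totalPixels === 0) return 0
  let differing = 0
  for (let i = 0; i < expected.length; i += 4) {
    // A pixel counts as different if any of its four channels differs.
    if (
      expected[i] !== actual[i] ||
      expected[i + 1] !== actual[i + 1] ||
      expected[i + 2] !== actual[i + 2] ||
      expected[i + 3] !== actual[i + 3]
    ) {
      differing++
    }
  }
  return differing / totalPixels
}

function withinTolerance(expected: Uint8Array, actual: Uint8Array, maxDiffPixelRatio: number): boolean {
  return diffPixelRatio(expected, actual) <= maxDiffPixelRatio
}

// 100 white pixels; the rendered image has 2 black ones, so 2% of pixels differ.
const reference = new Uint8Array(100 * 4).fill(255)
const rendered = new Uint8Array(reference)
rendered.set([0, 0, 0, 255], 0)
rendered.set([0, 0, 0, 255], 4)

console.log(diffPixelRatio(reference, rendered)) // 0.02
console.log(withinTolerance(reference, rendered, 0.01)) // false
console.log(withinTolerance(reference, rendered, 0.05)) // true
```

In Playwright the option can be passed per assertion, e.g. toHaveScreenshot({ fullPage: true, maxDiffPixelRatio: 0.01 }), where 0.01 is an example value and not necessarily the one I settled on.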

Let’s test on the real thing!

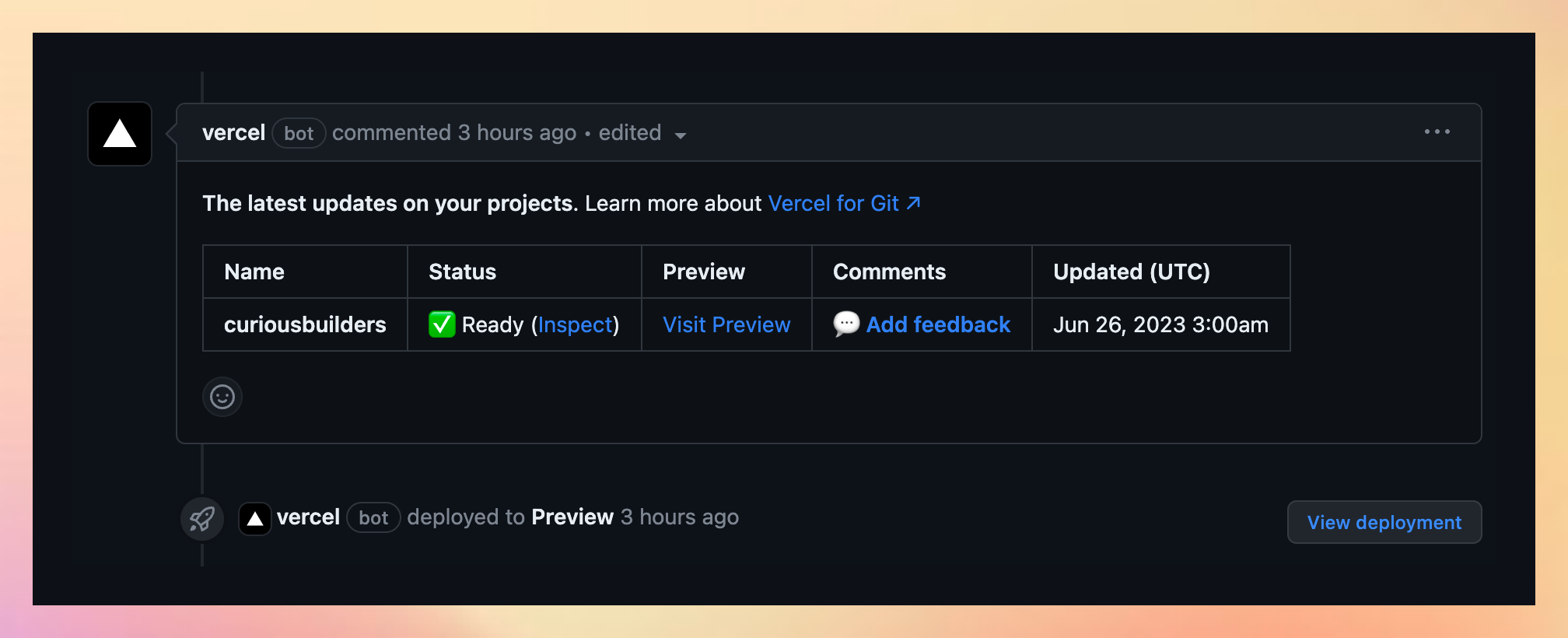

In Matteo’s blog post he describes how to run your tests against preview deployments on Netlify. Inspired by this I decided to see if I could do the same for my Vercel preview deployments.

In case you are unfamiliar with this concept: Vercel (and other providers like it) can build a preview deployment on your pull requests. An actual website where you can see your changes. Just like a production build.

Instead of running E2E tests against a local build, running tests against a preview URL would take me closer to the real thing.

To do this I needed:

- A way to get the Vercel preview URL

- A way to report the results of my E2E test in the PR

Luckily, I found both in this post: Automated Lighthouse metrics to your PR with Vercel and Github Actions.

This workflow runs a Lighthouse report on your Vercel preview URL. And with a few tweaks (replacing Lighthouse with Playwright) I made a scrappy E2E reporter for my UI tests.

Whenever I create a pull request the action will wait for Vercel to create a preview deployment and then it will run my UI tests against the preview deployment.

Below is the workflow file. It is hacked together and could surely be improved. But it works for now.

    name: Run e2e tests on preview url
    on:
      issue_comment:
        types: [edited]
    defaults:
      run:
        working-directory: website
    jobs:
      e2e:
        timeout-minutes: 30
        runs-on: ubuntu-latest
        steps:
          - name: Capture Vercel preview URL
            id: vercel_preview_url
            uses: aaimio/vercel-preview-url-action@v2.2.0
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          - name: Add comment to PR
            uses: marocchino/sticky-pull-request-comment@v2
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
              number: ${{ github.event.issue.number }}
              header: e2e
              message: |
                Running e2e tests...
          - uses: actions/checkout@v3
          - uses: actions/setup-node@v3
          - name: Install dependencies
            run: yarn install --frozen-lockfile
          - name: Install Playwright Browsers
            run: npx playwright install --with-deps
          - name: Run Playwright tests
            env:
              WEBSITE_URL: ${{ steps.vercel_preview_url.outputs.vercel_preview_url }}
            run: npx playwright test
          - name: Add comment after test
            uses: marocchino/sticky-pull-request-comment@v2
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
              number: ${{ github.event.issue.number }}
              header: e2e
              message: |
                🟢 Ran e2e tests!
          - name: Add failed comment (if failed)
            uses: marocchino/sticky-pull-request-comment@v2
            if: failure()
            with:
              GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
              number: ${{ github.event.issue.number }}
              header: e2e
              message: |
                🔴 e2e tests failed: https://github.com/USER/REPO/actions/runs/${{ github.run_id }}
          - uses: actions/upload-artifact@v3
            if: failure()
            with:
              name: playwright-report
              path: website/playwright-report/
              retention-days: 30

As you may have noticed (or not, there is a lot going on), I now provide a WEBSITE_URL env variable to tell Playwright what URL to target.
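Retargeting works because the specs navigate with relative paths such as 'posts/adam-collins'. According to the Playwright docs, page.goto combines such a path with the configured base URL using the standard URL constructor, which can be tried on its own. The preview hostname below is a made-up placeholder, not one of my deployments:

```typescript
// How a relative path from the spec combines with different base URLs.
// The preview hostname is a hypothetical placeholder.
const relativePath = 'posts/adam-collins'

const localUrl = new URL(relativePath, 'http://127.0.0.1:3000').toString()
const previewUrl = new URL(relativePath, 'https://my-site-git-branch.vercel.app').toString()

console.log(localUrl) // http://127.0.0.1:3000/posts/adam-collins
console.log(previewUrl) // https://my-site-git-branch.vercel.app/posts/adam-collins
```

The spec files stay untouched; only the base URL changes between a local run and a run against a preview deployment.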

This required a few modifications to playwright.config.ts.

My baseURL:

    baseURL: process.env.WEBSITE_URL ? process.env.WEBSITE_URL : "http://127.0.0.1:3000",

and webServer:

    webServer: process.env.WEBSITE_URL
      ? undefined
      : {
          command: "yarn dev",
          url: "http://127.0.0.1:3000",
          reuseExistingServer: !process.env.CI,
        },

Conclusion

I find it pretty amazing what you can do with a few .yml files and some of the many wonderful tools that are available (for free!). I didn’t have to write anything from scratch. I didn’t have to spend hours writing complex test specs. Yet now I have automated E2E tests for two of my websites.

I have used these tests for a while now and they give me a certain confidence when bumping package versions. I now briefly look at the test results and merge the version bump PRs made by Dependabot. Before this I would manually inspect the preview deployments, which felt like a waste of time.

I did spend quite some time setting up my E2E tests so they have definitely not paid off yet. But in time they may. And with the lessons learned I’m glad I ran this experiment.

Notes

- I ran into some issues testing my Astro blog using GitHub Actions. The tests would run just fine locally but would fail on GitHub with Error: Timed out waiting 60000ms from config.webServer. So instead of taking local screenshots I decided to use my actual website. For curious.builders my reference screenshots are from the live production site and I then compare these with Vercel preview URLs.

- Automating visual UI tests with Playwright and GitHub Actions

- Astro docs for Playwright testing

- Next.js docs for Playwright testing